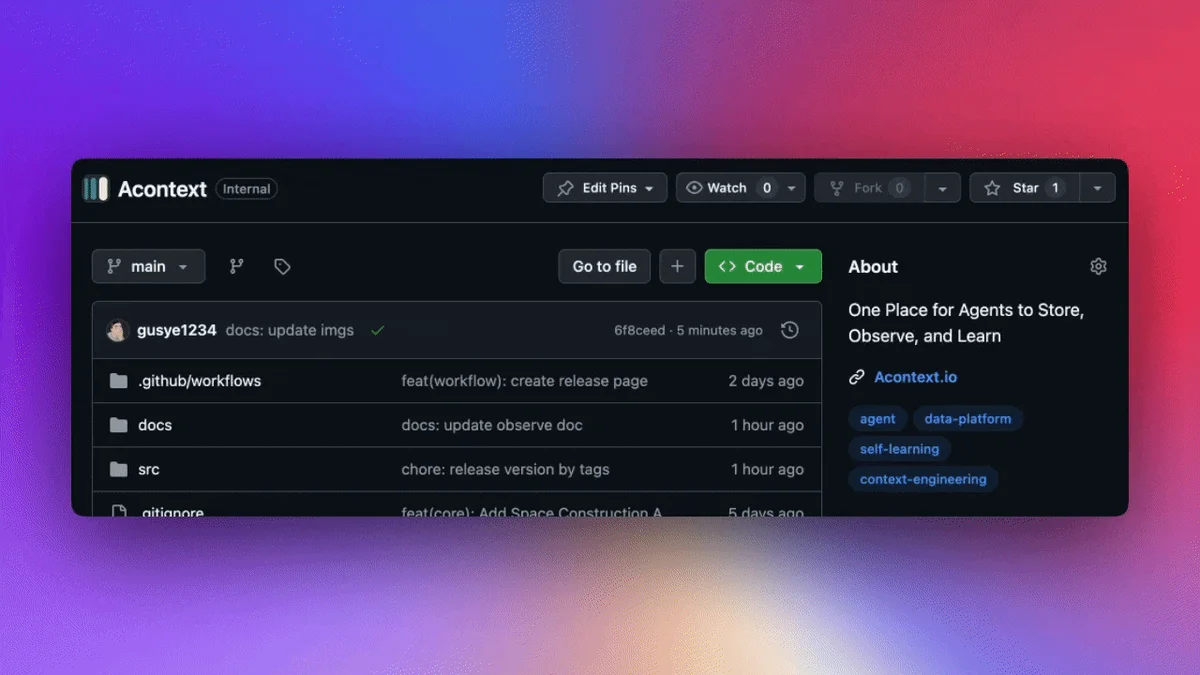

Type: GitHub Repository

Original Link: https://github.com/memodb-io/Acontext

Publication Date: 2026-01-19

Summary #

Introduction #

Imagine managing a technical support team for an e-commerce company. Every day, you receive thousands of support requests from customers who have issues with their orders, payments, or accounts. Each request is unique and often requires a personalized response. However, your support agents must navigate through a myriad of different types of documents, including technical manuals, FAQs, and transaction logs, to find the right solution. This process is slow and inefficient and often leads to incorrect or incomplete responses.

Now, imagine having a system that not only stores all this information in a structured way but also learns from past successes and errors. A system that can observe real-time interactions, adapt to the specific needs of each customer, and continuously improve. This is what Acontext offers: a data platform for context engineering that changes the way we build and manage AI agents.

Acontext addresses context management with tools for storing and observing contextual data and for learning from it. With Acontext, your support agents can respond to customer requests more quickly and accurately, improving the user experience and reducing the team’s workload.

What It Does #

Acontext is a data platform designed to facilitate context engineering, a crucial field for the development of intelligent and autonomous AI agents. In simple terms, Acontext helps you build agents that can understand and manage the context of user interactions, making responses more relevant and useful.

The platform offers features for storing, observing, and learning from contextual data. You can think of it as an intelligent archive that not only stores information but also organizes it so that it is easy to access and use. For example, if a support agent needs to respond to a request about a payment issue, Acontext can quickly retrieve all relevant information, such as refund policies, transaction logs, and FAQs, to provide a complete and accurate response.

Acontext supports a wide range of data types, including LLM (large language model) messages, images, audio, and files. This means you can use the platform to manage many kinds of contextual information, making your agents more versatile.

Why It’s Amazing #

The “wow” factor of Acontext lies in its ability to manage context dynamically, with built-in tools for observation and learning. Here are some of the key features that make Acontext amazing:

Dynamic and contextual:

Acontext is not just a simple data archive. The platform organizes and retrieves information according to the context of each request, making the agents’ responses more relevant and useful. For example, if a customer asks about a payment issue, Acontext can quickly retrieve the relevant refund policies, transaction logs, and FAQs to provide a complete and accurate response. An agent working from this context can then open with a concrete answer, such as: “Hello, I am your system. Service X is offline, but we can resolve the issue by following these steps…”

Real-time reasoning:

One of the biggest advantages of Acontext is its ability to observe and adapt in real-time. The platform monitors interactions between agents and users, analyzing contextual data to continuously improve responses. This means your agents can learn from past successes and errors, becoming more effective over time. For example, if a support agent receives a request about a payment issue, Acontext can analyze previous interactions to provide a more accurate and relevant response.

Observability and continuous improvement:

Acontext offers advanced observability tools, allowing you to monitor agent performance in real-time. You can see which tasks are being performed, what the success rates are, and where there is room for improvement. This allows you to continuously optimize agent performance, improving the user experience and reducing the team’s workload. For example, if you notice that a certain type of request is being handled inefficiently, you can use Acontext data to identify the problem and make the necessary changes.
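To make the idea of tracking success rates concrete, here is a minimal sketch of the kind of aggregation involved. It does not use the Acontext API: the `success_rates` function, the record layout, and the sample data are all hypothetical and exist only for illustration.

```python
from collections import defaultdict


def success_rates(records):
    """Map each task type to the fraction of its records marked successful."""
    totals = defaultdict(int)
    successes = defaultdict(int)
    for task_type, succeeded in records:
        totals[task_type] += 1
        if succeeded:
            successes[task_type] += 1
    return {task_type: successes[task_type] / totals[task_type] for task_type in totals}


# Hypothetical sample records: (task type, whether the agent resolved the request).
sample = [
    ("payment", True),
    ("payment", False),
    ("account", True),
    ("payment", True),
]

rates = success_rates(sample)
# Print the weakest task type first, since that is where to look for improvements.
for task_type, rate in sorted(rates.items(), key=lambda item: item[1]):
    print(f"{task_type}: {rate:.0%}")
```

Sorting by rate puts the request types that are handled least successfully at the top, which is the starting point for the kind of investigation described above.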

Improved user experience:

Because agents work from well-organized context, they can give more relevant and useful responses, reducing wait times and improving customer satisfaction.

How to Try It #

To get started with Acontext, follow these steps:

- Clone the repository: You can find the Acontext source code on GitHub at the following address: https://github.com/memodb-io/Acontext. Clone the repository to your computer using the command `git clone https://github.com/memodb-io/Acontext.git`.

- Prerequisites: Make sure you have Go, Python, and Node.js installed on your system. Acontext supports various data storage platforms, including PostgreSQL, Redis, and S3. Configure these platforms according to your needs.

- Setup: Follow the instructions in the `README.md` file to configure the development environment. This includes installing dependencies and configuring the necessary environment variables.

- Documentation: The main documentation is available in the GitHub repository. You will find detailed guides on how to use the various features of Acontext, as well as code examples and best practices.

- Usage examples: In the repository, you will find several usage examples that will help you understand how to implement Acontext in your applications. For example, you can find examples of how to handle technical support requests, monitor agent performance, and improve the user experience.
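Before working through the setup, it can help to confirm that the prerequisite toolchains are actually on your `PATH`. The following is a small sketch, not part of Acontext itself: the `find_missing` helper is hypothetical, and the executable names (`go`, `python3`, `node`) are assumptions that may differ on your platform.

```python
import shutil

# Executable names are assumptions; adjust them for your platform
# (for example, "python" instead of "python3" on Windows).
REQUIRED_TOOLS = {"Go": "go", "Python": "python3", "Node.js": "node"}


def find_missing(tools, which=shutil.which):
    """Return the display names of tools whose executable is not found on PATH."""
    return [name for name, exe in tools.items() if which(exe) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_TOOLS)
    if missing:
        print("Missing prerequisites: " + ", ".join(missing))
    else:
        print("All prerequisites found.")
```

The check only verifies that each executable can be located. It does not verify versions, so the version requirements in the repository's `README.md` still apply.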

There is no one-click demo, but the setup process is well-documented and supported by an active community. If you have questions or encounter problems, you can join the Acontext Discord channel for assistance: https://discord.acontext.io.

Final Thoughts #

Acontext represents a significant step forward in the field of context engineering, offering tools for storing, observing, and learning from contextual data. The platform is designed to improve the efficiency and effectiveness of AI agents, making user interactions more relevant and useful.

In the broader tech ecosystem, Acontext positions itself as an innovative solution for context management, offering significant advantages for companies looking to improve the user experience and optimize operations. Acontext’s ability to observe and adapt in real-time, along with its advanced observability, makes it a valuable tool for any development team.

In conclusion, Acontext is not just a data platform but a true partner for building intelligent and autonomous AI agents. Its potential is enormous, and we are excited to see how it will continue to evolve and revolutionize the way we manage context. Join the Acontext community and discover how you can take your application to the next level.

Use Cases #

- Private AI Stack: Integration into proprietary pipelines

- Client Solutions: Implementation for client projects

- Development Acceleration: Reduction of time-to-market for projects

Resources #

Original Links #

- GitHub - memodb-io/Acontext: Data platform for context engineering. Context data platform that stores, observes and learns. Join - Original Link

Article recommended and selected by the Human Technology eXcellence team, processed through artificial intelligence (in this case with LLM HTX-EU-Mistral3.1Small) on 2026-01-19 10:54 Original source: https://github.com/memodb-io/Acontext

Related Articles #

- GitHub - Search code, repositories, users, issues, pull requests…: 🔥 A tool to analyze your website’s AI-readiness, powered by Firecrawl - Code Review, AI, Software Development

- GitHub - NevaMind-AI/memU: Memory infrastructure for large language models and AI agents - AI, AI Agent, LLM

- GitHub - different-ai/openwork: An open-source alternative to Claude Cowork, powered by OpenCode. - AI, Typescript, Open Source